Rigorous Medical Imaging AI Evaluations

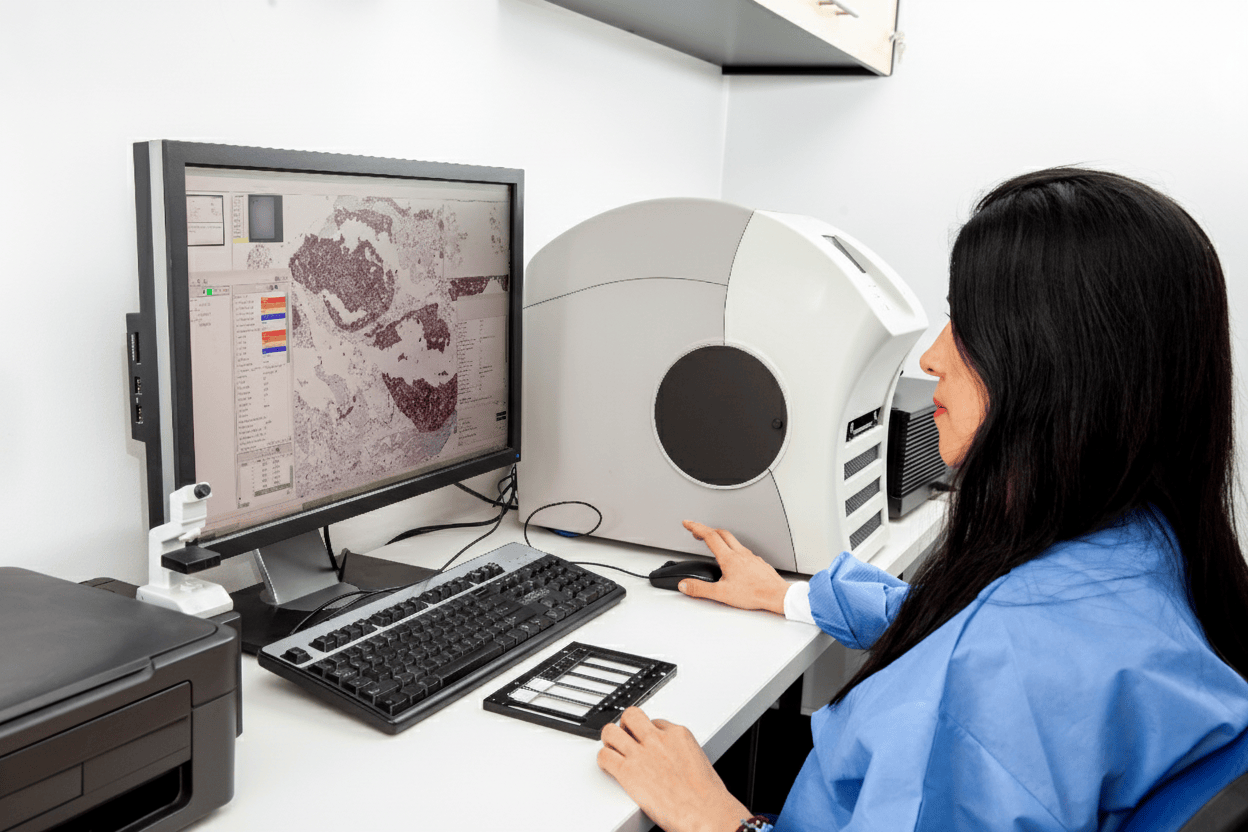

Bridge the gap between AI model claims and reliable clinical practice. Whether you’re evaluating vendor models for clinical workflows or building your own models for R&D, Tessel provides the independent validation and monitoring infrastructure pathologists, radiologists, and administrators need for confident medical imaging AI deployment.

Why medical imaging AI falls short of expectations

Despite great promise on benchmarks and case studies, AI adoption crawls forward at a frustrating pace in hospitals. The problem isn’t the technology. It’s the evaluation gap between research and reality.

Bias and reliability gaps

Generic model benchmarks don't reflect your hospital's unique characteristics. Scanners, staining protocols, lab workflows, and patient populations all create variability that causes models to fail under real-world conditions. When models degrade by 10% on your hospital’s data, are they still clinically viable?

Interoperability

Medical imaging AI only creates value when it connects to daily workflows. New tools must integrate with IMS and LIS systems. Without seamless integration, results remain siloed and pathologists won't adopt them.

Compliance and economic sustainability

Without clear reimbursement pathways, industry AI solutions create unsustainable cost burdens. Administrators need proof of ROI, but lack the evaluation infrastructure to assess safety and value.

How Tessel helps hospitals deploy AI safely

Our mission is building trust in medical imaging AI through rigorous, independent validation that serves the entire healthcare ecosystem.

AI-ready data infrastructure

Position your hospital for AI success with unified data infrastructure. Tessel connects directly to your existing IMS and LIS systems, adding an AI-ready data layer without requiring any changes to your established processes. This lightweight foundation enables model evaluation, internal AI development, research participation, and clinical trial integration, all built on top of what you already have.

Point-in-time evaluation

Independent testing on your hospital's actual data reveals true model performance. Our analysis goes beyond accuracy scores, using failure mode analysis and cutting-edge interpretability research to expose the internal concepts models rely on and where they fail.

We build a digital hypothesis for how your AI model behaves, following the same reasoning process a pathologist uses when forming a diagnosis. Instead of trusting "89% performance on breast cancer cases," you'll understand the exact tissue structures the model struggles with and which scanner variations impact reliability.
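To make the idea of looking past a single headline number concrete, here is a minimal Python sketch that breaks accuracy down by scanner. It is an illustration only, not Tessel's implementation: the `accuracy_by_group` helper, the record fields (`scanner`, `label`, `prediction`), and the sample records are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records, group_key):
    """Return {group value: accuracy} for a list of prediction records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for rec in records:
        group = rec[group_key]
        total[group] += 1
        if rec["prediction"] == rec["label"]:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical records: field names and values are made up for illustration.
records = [
    {"scanner": "scanner_a", "label": "malignant", "prediction": "malignant"},
    {"scanner": "scanner_a", "label": "benign", "prediction": "benign"},
    {"scanner": "scanner_b", "label": "malignant", "prediction": "benign"},
    {"scanner": "scanner_b", "label": "benign", "prediction": "benign"},
]

per_scanner = accuracy_by_group(records, "scanner")
overall = sum(r["prediction"] == r["label"] for r in records) / len(records)
```

In this toy data the overall accuracy hides the fact that every error comes from one scanner, which is the kind of pattern a single aggregate score cannot show.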

Continuous monitoring

Tessel monitors model predictions in production, identifying drift and highlighting new failure modes as they emerge. When your operating procedures change (new staining protocols, scanner updates, workflow modifications), we catch performance degradation before it impacts patient care.

Unreliable predictions are automatically flagged for pathologist review. Clear reports help governance teams and administrators track reliability and ROI over time.
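As a rough illustration of what drift detection and review flagging can look like, the Python sketch below compares confidence-score distributions with a population stability index (PSI) and flags low-confidence cases. This is a swapped-in, generic technique chosen for the example, not a description of how Tessel works: the function names, the `case_id` and `confidence` fields, the bin count, and the 0.8 threshold are all assumptions.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """PSI between two samples of scores in [0, 1]; 0.0 means identical histograms."""

    def histogram(scores):
        counts = [0] * bins
        for s in scores:
            idx = min(int(s * bins), bins - 1)  # a score of 1.0 goes in the last bin
            counts[idx] += 1
        # Additive smoothing so empty bins do not cause log(0) or division by zero.
        return [(c + 0.5) / (len(scores) + 0.5 * bins) for c in counts]

    p = histogram(baseline)
    q = histogram(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def flag_for_review(predictions, threshold=0.8):
    """Return case IDs whose confidence falls below a (hypothetical) threshold."""
    return [p["case_id"] for p in predictions if p["confidence"] < threshold]


# Hypothetical confidence scores before and after a staining protocol change.
baseline_scores = [0.95, 0.92, 0.90, 0.97, 0.93, 0.91]
shifted_scores = [0.70, 0.65, 0.72, 0.90, 0.60, 0.68]

psi_same = population_stability_index(baseline_scores, baseline_scores)
psi_shifted = population_stability_index(baseline_scores, shifted_scores)

flagged = flag_for_review([
    {"case_id": "case-001", "confidence": 0.95},
    {"case_id": "case-002", "confidence": 0.62},
])
```

A larger PSI suggests that the production score distribution has moved away from the baseline. What counts as an alarming value, and which confidence threshold triggers review, would need to be set for each deployment.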

Benefits for every stakeholder

For pathologists

Gain confidence in AI reliability and understand exactly how AI will impact your workflow and patient outcomes. Compare AI reasoning to your own diagnostic process through mechanistic interpretability that reveals which biological features models actually use.

For administrators

Receive clear ROI analysis and cost justification data. Track performance metrics that matter for operational efficiency and patient care quality. Make apples-to-apples comparisons when deciding which AI models and vendors to bring on.

Collaborating with leading medical institutions

Our work is grounded in partnerships with top-tier academic medical centers and research institutions.

Already advancing medical imaging AI adoption

Through partnerships with academic medical centers, we're bridging the gap between research and clinical practice. Our recent case study on automatic label error detection shows how representation-level analysis catches data quality issues that undermine model reliability, exactly the kind of hidden problems that erode trust with healthcare institutions.

A higher standard for medical AI

FDA and CE-IVDR approvals demonstrate general safety, but regulatory trials can't capture every hospital's unique environment or enable direct vendor comparisons. Just as personalized medicine demands patient-specific treatment, AI deployment demands hospital-specific validation and head-to-head comparisons.

When it comes to patient care, we need higher standards.
